DiffPhysDrone

Source: https://github.com/HenryHuYu/DiffPhysDrone

A swarm of drones can fly as fast as birds through uncharted forests, urban ruins, and even obstacle-filled indoor spaces, without relying on maps, communications, or expensive equipment. This vision has now become a reality!

A research team from Shanghai Jiao Tong University proposed an end-to-end method that integrates drone physical modeling and deep learning. This study successfully deployed a differentiable physical training strategy to a real robot for the first time, realizing a truly "lightweight, deployable, and collaborative" end-to-end autonomous navigation solution for drone swarms, significantly surpassing existing solutions in robustness and maneuverability.

This result has been published online in Nature Machine Intelligence, with Master Zhang Yuang, Dr. Hu Yu, and Dr. Song Yunlong as co-first authors, and Professor Zou Danping and Professor Lin Weiyou as corresponding authors.

Background

In recent years, aerial robots have been widely used in complex dynamic environments such as search and rescue, power inspections, and material delivery, placing higher demands on the agility and autonomy of aerial vehicles. Traditional methods typically use map-based navigation strategies, separating the perception, planning, and control modules. Although some results have been achieved, their cascaded structure is prone to error accumulation and system delays, making it difficult to meet the needs of high-speed dynamic scenarios and multi-robot collaboration. Learning-based methods, such as reinforcement learning and imitation learning, perform well in single-robot flight, but face problems such as high training costs and difficulty in designing expert strategies in multi-robot systems, which limits their application in swarm intelligence. Therefore, how to efficiently train robust and scalable vision-driven multi-robot collaborative strategies remains a key issue that needs to be addressed.

Innovative achievements

To address this problem, the team proposed an end-to-end approach that combines deep learning with first-principles physics modeling through differentiable simulation. Using a simplified point-mass physics model, the team directly optimizes the neural network control policy by backpropagating the loss gradients in a robotic simulation. Experiments demonstrate that, on the training side, this combined physics-based and data-driven training approach requires only 10% of the samples required by existing reinforcement learning frameworks to achieve comparable performance. On the deployment side, the approach demonstrates excellent performance in both multi-agent and single-agent tasks. In multi-agent scenarios, the system exhibits self-organizing behavior, enabling autonomous collaboration without the need for communication or centralized planning. In single-agent tasks, the system achieves a 90% navigation success rate in unknown and complex environments, significantly outperforming existing state-of-the-art solutions in robustness. The system operates without a state estimation module and can adapt to dynamic obstacles. In a realistic forest environment, it achieves navigation speeds of up to 20 meters per second, double that of previous imitation learning-based approaches. Remarkably, all of this functionality is implemented on a low-cost computer priced at only 150 yuan, less than 5% of the cost of existing GPU-equipped systems.

In a single-machine scenario, the network model was deployed on a drone and tested in various real-world environments, including forests, urban parks, and indoor scenes with static and dynamic obstacles. The network model achieved a 90% navigation success rate in unknown and complex environments, demonstrating greater robustness than existing state-of-the-art methods.

In a real forest environment, the drone achieved flight speeds of up to 20 meters per second, twice the speed of existing imitation learning-based approaches. Zero-shot transfer was achieved in all test environments. The system operates without GPS or VIO positioning information and can adapt to dynamic obstacles.

Figure 1 Multi-aircraft flight

Figure 1 Multi-aircraft flight

In a multi-drone collaborative scenario, the network model was deployed on six drones to perform tasks such as traversing complex obstacles in the same direction and exchanging positions. The strategy demonstrated high robustness in scenarios involving traversing doorways, dynamic obstacles, and complex static obstacles. In experiments involving multiple drones traversing doorways and exchanging positions, the strategy demonstrated self-organizing behavior without the need for communication or centralized planning.

Key ideas

Embedded with physical principles, drones "learn to fly on their own"

End-to-end differentiable simulation training: The policy network directly controls the drone's motion, and backpropagation is implemented through a physical simulator.

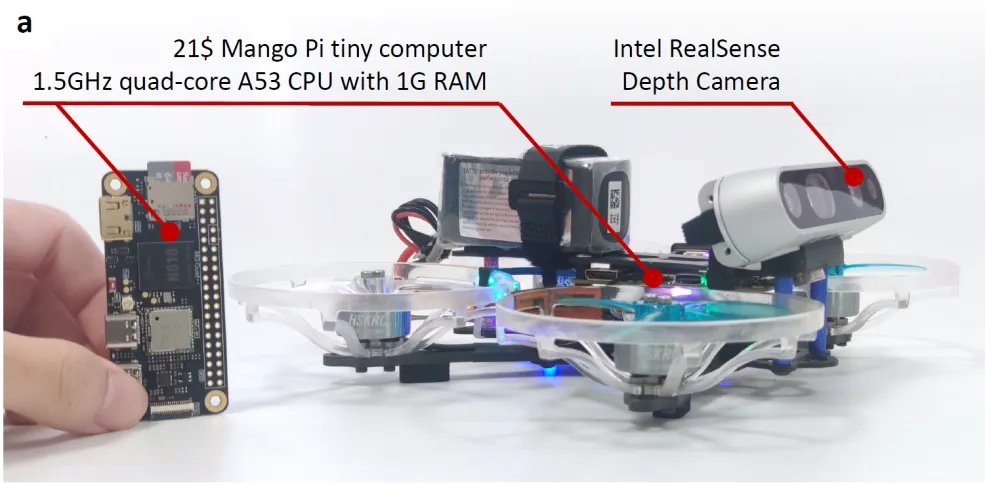

Lightweight design: The entire set of end-to-end network parameters is only 2MB and can be deployed on a computing platform of 150 yuan (less than 5% of the cost of a GPU solution).

Efficient training: Convergence takes only 2 hours on an RTX 4090 graphics card.

Figure 4 Low-cost computing power platform

Figure 4 Low-cost computing power platform

The overall training framework is shown in the figure below. The policy network is trained by interacting with the environment. At each time step, the policy network receives a depth image as input and outputs control instructions (thrust acceleration and yaw angle) through the policy network.

The differentiable physics simulator simulates the particle motion of the drone according to the control instructions and updates the status:

-53d4e272e717171aeb4339401dac801b.webp)

In the new state, a new depth image can be rendered and the cost function can be calculated.

The cost function consists of multiple sub-terms, including speed tracking, obstacle avoidance, and smoothing. After the trajectory is collected, the cost function can be used to calculate the gradient through the chain rule (red arrow in Figure 1) to achieve backpropagation, thereby directly optimizing the policy parameters.

"Simplicity is beauty" training tips

- Simple Model: Use particle dynamics instead of complex vehicle modeling.

- Simple images: Low-resolution rendering + explicit geometry modeling to improve simulation efficiency.

- Simple network: three-layer convolution + GRU timing module, compact and efficient.

In addition, by introducing a local gradient attenuation mechanism during training, the gradient explosion problem during training is effectively solved, allowing the drone's "focus on the present" maneuvering strategy to naturally emerge.

Method comparison

Reinforcement learning, imitation learning, or physics-driven?

Currently, the mainstream training paradigms for embodied intelligence fall into two categories: reinforcement learning (RL) and imitation learning (IL). However, both approaches face significant bottlenecks in efficiency and scalability:

Reinforcement learning (such as PPO) often adopts a model-free strategy, completely disregarding the physical structure of the environment or the controlled object. Its policy optimization mainly relies on sampling-based policy gradient estimation, which not only leads to extremely low data utilization, but also seriously affects the convergence speed and stability of training.

Imitation learning (e.g., Agile [Antonio et al. (2021)]) relies on a large amount of high-quality expert demonstrations as a supervisory signal. Acquiring such data is often expensive and difficult to cover all possible scenarios, thus affecting the model's generalization and scalability.

In contrast, the training framework based on differentiable physical models proposed in this study effectively combines the advantages of physical priors and end-to-end learning.

By modeling the aircraft as a simple mass point system and embedding it into a differentiable simulation process, we can directly perform gradient backpropagation on the parameters of the policy network, thus achieving an efficient, stable, and physically consistent training process.

The study systematically compared three methods (PPO, Agile, and this research method) in the experiment. The main conclusions are as follows:

Training efficiency: On the same hardware platform, this method can achieve convergence in about 2 hours.

This is much shorter than the training cycle required by PPO and Agile. Data utilization: Using only about 10% of the training data, this method surpasses the PPO+GRU solution that uses the full data in terms of policy performance.

Convergence performance: During the training process, this method exhibits lower variance and faster performance improvement, and the convergence curve is significantly better than the two mainstream methods.

Deployment effect: In real or nearly real obstacle avoidance tasks, the final obstacle avoidance success rate of this method is significantly higher than that of PPO and Agile, showing stronger robustness and generalization ability.

This comparison result not only verifies the effectiveness of "physical drive", but also shows that when we provide the correct training method for the intelligent agent, strong intelligence does not necessarily require massive data and expensive trial and error.

-bc2c7b419ff83380cdbb5d9841dfa15c.webp) Figure 5: This research method surpasses the existing method (PPO+GRU) with 10% of the training data, and its convergence performance is much higher than the existing method.

Figure 5: This research method surpasses the existing method (PPO+GRU) with 10% of the training data, and its convergence performance is much higher than the existing method.

-e1e51579bcea475d8d395a739043cb60.webp) Figure 6 Comparison of model deployment obstacle avoidance success rates

Figure 6 Comparison of model deployment obstacle avoidance success rates

Seeing flowers in the fog

Explainability Exploration

Although end-to-end neural networks have shown strong performance in autonomous flight obstacle avoidance tasks, the opacity of their decision-making process remains a major obstacle to practical deployment.

To this end, the researchers introduced the Grad-CAM activation map tool to visualize the perceptual attention of the policy network during flight.

The figure below shows the input depth map (top row) and its corresponding activation map (bottom row) under different flight states.

-dfec4d6c49111ea7c28d4f5f0d2e1d0e.webp) Figure 7. By observing the activation map, the activated area is strongly correlated with the most dangerous obstacle.

Figure 7. By observing the activation map, the activated area is strongly correlated with the most dangerous obstacle.

We can observe that the network's high response areas are highly concentrated near obstacles in the flight path where collisions are most likely, such as tree trunks and the edges of pillars. This suggests that, despite not explicitly monitoring these "dangerous areas" during training, the network has learned to focus on the areas with the greatest potential risk. This result conveys two important messages:

Not only does the network successfully avoid obstacles at the behavioral level, but its perception strategy itself also has certain structural rationality and physical interpretability; and interpretability tools also help us further understand the "hidden rules" behind the end-to-end strategy.

- Paper link: https://www.nature.com/articles/s42256-025-01048-0

- Project address: https://henryhuyu.github.io/DiffPhysDrone_Web/

- Code repository: https://github.com/HenryHuYu/DiffPhysDrone

- Jiaotong University Wisdom: https://news.sjtu.edu.cn/jdzh/20250711/212756.html

- New Zhiyuan: https://mp.weixin.qq.com/s/V6VdRVuEt_cvalSLYV46eA